A 🌈 magical 🌈 AWS serverless developer experience

Find out how we build serverless applications at Plain and what makes our developer experience truly 🌈 magical 🌈.

This article was originally published on Plain's Journal • A magical AWS serverless developer experience.

Check out the modern customer service platform we're building at Plain.com

A common question developers bring up when wanting to develop serverless and cloud native applications is: what will the developer experience be like? It is an important question as a good developer experience with a quick feedback loop results in happier and more productive developers who are able to ship features rapidly.

Since we’re building Plain to be intentionally small, an outstanding developer experience is a must. We need to make sure that the few engineers that we do hire can make the most impact by delivering product features quickly while maintaining a high quality.

We had the opportunity to think about how to solve this problem in 2021, as Plain was being built from scratch. When we were deciding on our general tech stack, the experience of making changes on a daily basis played a large role in the decision-making, as did how we’d be able to build a successful business over the next 5 to 10 years without needing foundational replatforming. This meant being able to run our services at scale at a low cost without needing a whole department just to look after homegrown infrastructure.

The rationale behind these decisions definitely requires its own separate post, but we ended up deciding to go all-in on serverless and cloud native, full-stack TypeScript, and using AWS as our cloud provider, due to its maturity and popularity. We decided that using proprietary AWS services is an acceptable lock-in as there’s high value gained compared to the likelihood of switching cloud providers. I’ve definitely seen companies spend huge amounts of effort trying to be cloud-agnostic, without actually realizing any tangible benefit from it.

What’s unique about serverless development

There are some unique aspects to developing and testing serverless applications. One of the main differences is that you end up using a lot of cloud services and aim to offload as much responsibility to serverless solutions as possible.

In the case of AWS Lambda this means that you typically end up using API Gateway, DynamoDB, SQS, SNS, S3, EventBridge, ElastiCache, etc. to build your application. Using so many services involves a lot of configuration, permissions, and infrastructure that need to be developed, tested, and deployed. If you only focus on testing your Lambda code then you’re skipping a large part of your feature. Examples of mistakes you might encounter if you don’t verify your infrastructure:

- missing an S3 trigger to SQS or a Lambda function

- missing an EventBridge rule to route events to the right targets

- missing a Lambda IAM role update to use a new AWS service

- incorrect CORS or authorizer configuration in API Gateway

One of the most important questions to answer is: when do you want to find out about these mistakes?

- While writing and running your tests?

- While manually trying out the feature you’re working on?

- In your Continuous Integration run via some E2E integration test suite?

- In a shared deployed environment, such as dev or staging?

- Or in the worst case scenario: in production?

We decided to do it as soon as possible: while writing and running tests. This meant that the debate of “should you mock cloud dependencies or embrace the cloud” was not really a question for us. Having our Lambdas use AWS mocks or some localhost emulation still leaves a lot to be desired in terms of “will it actually work” when deployed. Gareth McCumskey’s blog post “Why local development for serverless is an anti-pattern” captures the "emulate vs. use the cloud" debate quite well, and I’d highly recommend reading it.

The largest implication of developing against the cloud is the need for internet access to effectively write code. While this might be an unacceptable trade-off for some companies or people, we are a remote-first company and already need internet access to communicate with our colleagues, so there are very few times when people don’t have network connectivity.

With the general principle that we want to be developing against the cloud and not trying to build a local developer experience, we set out evaluating various tools and technologies to find out what works for us.

The 🌈 magical 🌈 stack

So what does our magical AWS serverless developer experience look like? At a high level the following make up the key components:

- Every developer has their own personal AWS account

- AWS CDK to develop our infrastructure and Serverless Stack (SST) to get a very quick feedback loop

- Writing significantly more integration tests than unit tests

- Full-stack TypeScript

Adopting these technologies and practices yields a pretty brilliant developer experience.

Personal AWS accounts

When going fully serverless, each developer having their own personal sandbox AWS account is a must. As previously mentioned, to build most features it’s not enough to write the code: there’s a lot of infrastructure that needs to be developed, changed, and tested. Having personal AWS accounts allows each developer to experiment and develop without impacting any other engineer or a shared environment like development or staging. Combined with our heavy use of infrastructure as code, this allows everyone to have their own clone of the production environment.

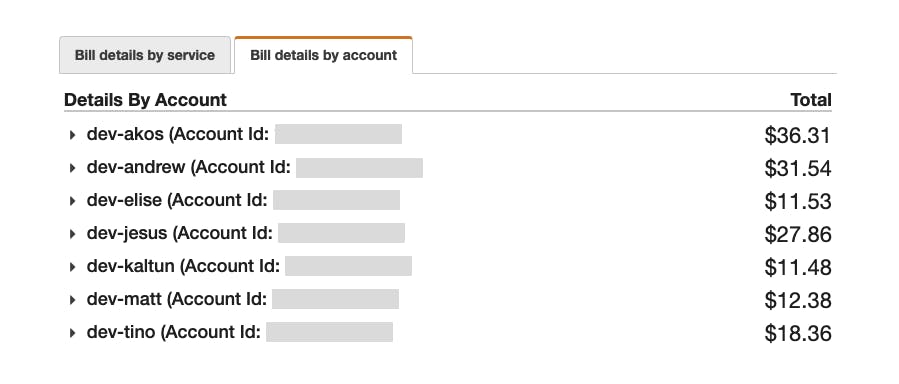

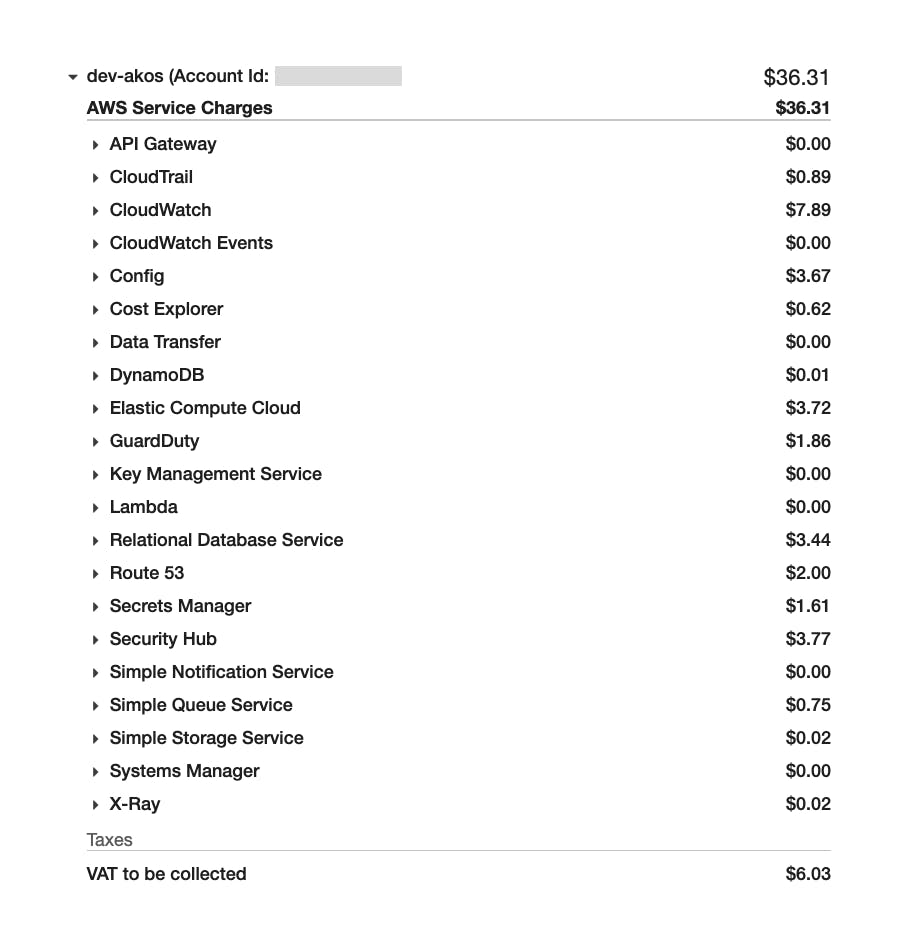

You might be thinking: isn’t that expensive? Won’t we be paying hundreds of dollars to AWS? Nope, not with serverless solutions! The genuinely serverless solutions are all pay per usage, so if your AWS account has zero activity, for example through the night and weekend when engineers aren’t working, then you won’t pay a dime. There are a few exceptions to this, such as the costs of S3 storage, DynamoDB storage, RDS storage, and Route 53 hosted zones, but they tend to be minimal.

For example, Plain’s January bill for our 7 developer accounts was a total of $150—pennies compared to the developer velocity we gain by everyone having their clone of production. Typically, the largest cost for each developer is our relational database: Amazon Aurora Serverless v1 PostgreSQL. It automatically scales up when it receives requests during development and down to zero after 30 minutes of inactivity.

AWS CDK and SST

With all of our features considerably depending on cloud resources, having our infrastructure defined as code and version controlled is a hard requirement. We initially looked at tools like Terraform, Pulumi, Serverless Framework, AWS SAM, but they either required us to learn new programming or templating languages, or the developer experience of the full feature lifecycle wasn’t up to our expectations.

Back in March 2021, we stumbled upon Serverless Stack (SST) when it was still at version 0.9.11. What sold us instantly was its Live Lambda reloading feature and the fact that it is built on AWS CDK. SST and AWS CDK support TypeScript natively, so they fit nicely with our desire for full-stack TypeScript.

Live Lambda Development allowed us to write our Lambda code and have our integration tests run against live AWS services with a 2-3 second feedback loop. SST replaces your Lambda with a shim that proxies all invocations over WebSockets to your local developer machine, where your code runs, calls other AWS services, and returns a response. The local runtime uses the Lambda execution role’s permissions to make AWS API calls to real services, so we were quite confident that a change would work when deployed to production. Overall this meant that we caught infrastructure issues far more quickly than we would have with mocking or emulation.

Live lambda development architecture overview
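The proxying idea can be illustrated with a small in-memory sketch. This is not SST's implementation: the `Channel` class merely stands in for the WebSocket connection, and `makeShim`, `makeLocalBridge`, and the message shapes are hypothetical names chosen for illustration.

```typescript
// A minimal in-memory sketch of "a deployed shim forwards invocations to a local handler".
// Assumption: Channel stands in for the WebSocket link; none of these names come from SST.

type Invocation = { requestId: string; event: unknown };
type InvocationResult = { requestId: string; response: unknown };

// Stand-in for a bidirectional connection between the deployed shim and a laptop.
class Channel {
  private onInvocation?: (msg: Invocation) => void;
  private pending = new Map<string, (response: unknown) => void>();

  // Local side: register the callback that receives forwarded invocations.
  listen(callback: (msg: Invocation) => void): void {
    this.onInvocation = callback;
  }

  // Cloud side: forward an invocation and wait for the matching result.
  send(msg: Invocation): Promise<unknown> {
    return new Promise((resolve, reject) => {
      if (!this.onInvocation) {
        reject(new Error('no local session connected'));
        return;
      }
      this.pending.set(msg.requestId, resolve);
      this.onInvocation(msg);
    });
  }

  // Local side: send the handler's result back to the waiting shim.
  reply(result: InvocationResult): void {
    const resolve = this.pending.get(result.requestId);
    if (resolve) {
      this.pending.delete(result.requestId);
      resolve(result.response);
    }
  }
}

let nextRequestId = 0;

// What gets deployed in place of the real function: it only forwards events.
function makeShim(channel: Channel) {
  return (event: unknown): Promise<unknown> =>
    channel.send({ requestId: `req-${++nextRequestId}`, event });
}

// What runs on the developer machine: it executes the real handler code.
function makeLocalBridge(
  channel: Channel,
  handler: (event: unknown) => Promise<unknown>,
): void {
  channel.listen(async (msg) => {
    const response = await handler(msg.event);
    channel.reply({ requestId: msg.requestId, response });
  });
}

// Usage: the locally edited handler answers an invocation made against the shim.
async function demoLiveInvocation(): Promise<unknown> {
  const channel = new Channel();
  makeLocalBridge(channel, async (event) => ({ statusCode: 200, echoed: event }));
  const shim = makeShim(channel);
  return shim({ path: '/hello' });
}
```

Because the handler runs locally, editing it changes the next response without a redeploy, which is where the short feedback loop comes from. Error handling and reconnection are left out of the sketch.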

The implication of this setup is that we can do genuine full-stack development easily. We can point our React frontend application to a personal AWS account’s deployed API Gateway URL and change both the frontend and backend at the same time with both codebases live reloading. Given that everything is deployed and using the same AWS services that the production environment uses, our frontend application completely works without needing workarounds.

While it was slightly risky to choose to build our backend stack on what was, at the time, a relatively unknown tool, we knew that we had the AWS CDK escape hatch. If we ever encountered something that SST didn’t support or that we didn’t like, we could instead use a very mature AWS CDK construct. This gave us the best balance between the fantastic developer experience of SST and the maturity, feature richness, and first-party support of AWS CDK.

Serverless Stack also has some incredible features like:

- Dropping breakpoints into your Lambda code and debugging in your local IDE. This is helped by the --increase-timeout flag, which increases all Lambda timeouts to 15 minutes. If you’re interested in this check out the docs or this video.

- Detecting infrastructure changes and prompting you to deploy them, i.e. getting as close to live reload as possible. Deployments still take a bit of time as it’s CloudFormation under the hood.

- A web based console (SST Console) to visualize your stacks, Lambdas, S3 buckets, as well as the ability to replay individual Lambda events.

- Auto-exporting removed CloudFormation stack outputs: we ran into this multiple times before and it was a pain as we sometimes noticed too late.

- An ever-growing library of constructs

SST’s Slack community has also been very helpful whenever we encountered issues or had questions or feature requests. Frank, Jay, Dax, and the community are always happy to help. I’d highly recommend giving SST a try as it’s hard to find anything that works so well.

Testing

Early on we had an ambition of having full confidence in our test suite. If our CI goes green then it should be safe to deploy that change to production—which is exactly what we do on merge to the main branch. To achieve this we decided to focus our testing efforts on a robust integration test suite rather than unit testing individual lambda functions or small code blocks. This may seem like bad practice or going against the conventional testing pyramid. But when we encounter step-change innovation, such as serverless, it’s important to question existing practices to see if those practices still make sense.

To be absolutely clear: we do write unit tests where it makes sense. Where we have some business logic or calculation then we do write an exhaustive unit test suite. An example is our core customer state machine having a unit test for all possible states and state transitions. But unit testing things like SQL queries, AWS API calls, or our GraphQL requests is definitely off the table as it yields little real-world assurance. What you end up testing is a lot of implementation detail and maintaining high quality mocks or emulation is a lot of effort that is rarely worth it.
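As an illustration of exhaustively unit testing a state machine, here is a minimal sketch. The states, events, and transitions are hypothetical assumptions made up for this example, not Plain's actual customer state machine, and plain `throw` statements stand in for a test framework.

```typescript
// A hypothetical customer state machine; the states and events are illustrative
// assumptions, not Plain's actual domain model.

type CustomerState = 'idle' | 'active' | 'snoozed';
type CustomerEvent = 'message_received' | 'snooze' | 'mark_done';

// Every (state, event) pair is listed explicitly, so the compiler flags a missing case.
const transitions: Record<CustomerState, Record<CustomerEvent, CustomerState>> = {
  idle: { message_received: 'active', snooze: 'idle', mark_done: 'idle' },
  active: { message_received: 'active', snooze: 'snoozed', mark_done: 'idle' },
  snoozed: { message_received: 'active', snooze: 'snoozed', mark_done: 'idle' },
};

function nextState(state: CustomerState, event: CustomerEvent): CustomerState {
  return transitions[state][event];
}

// Table-driven expectations: one row per state and event combination (3 x 3 = 9 rows).
const expectations: Array<[CustomerState, CustomerEvent, CustomerState]> = [
  ['idle', 'message_received', 'active'],
  ['idle', 'snooze', 'idle'],
  ['idle', 'mark_done', 'idle'],
  ['active', 'message_received', 'active'],
  ['active', 'snooze', 'snoozed'],
  ['active', 'mark_done', 'idle'],
  ['snoozed', 'message_received', 'active'],
  ['snoozed', 'snooze', 'snoozed'],
  ['snoozed', 'mark_done', 'idle'],
];

// Checks every row and returns how many transitions were verified.
function runExhaustiveChecks(): number {
  let checked = 0;
  for (const [from, event, expected] of expectations) {
    const actual = nextState(from, event);
    if (actual !== expected) {
      throw new Error(`${from} + ${event}: expected ${expected}, got ${actual}`);
    }
    checked += 1;
  }
  return checked;
}
```

Pure logic like this needs no cloud resources, which is why it sits comfortably in the unit test portion of the suite. In a Jest suite the same table could be fed to `it.each`.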

To put it in numbers, our current test suite ratio is about 30% unit tests and 70% integration test cases.

Our integration tests are designed and written in a way in which they’ll be reasonably quick and test for behaviour and not for implementation. What this means is that we try to avoid asserting on internal implementation details, such as the data stored in DynamoDB or RDS. Instead we focus on verifying externally (from the Lambda’s point of view) visible behaviour, such as API responses or events being published. For our events, we draw the line at testing only that an event has been published and not asserting all downstream consumers. We write separate integration tests for each consumer. It also requires us to maintain sensible domain boundaries in our code to make sure that each domain can be tested independently.

This way of writing tests also has the benefit that they can be run against shared environments. We currently run the full integration test suite against our development environment after every deploy triggered by a merge to main, and on a schedule to detect flaky tests. There’s nothing stopping us from running these exact same tests in our production environment as well. In theory, we could delete 100% of our code, rewrite all of our Lambdas in Delphi, and as long as our integration test suite passes we could ship it to production. (Note: we’ve yet to try this and don’t plan on it anytime soon.)

A typical GraphQL API integration test for a query or mutation roughly does the following:

- Request a user from a pool of authenticated users (we ran into some quotas and limits with our identity provider)

- Create a new workspace so that there’s a clean state

- Set up the state for the test, such as creating a customer, sending a chat message, etc.

- Make the GraphQL query

- Assert the GraphQL response

- In the case of mutations: assert any events that should have been published

    describe('create issue mutation', () => {
      it('should create an issue', async () => {
        // Given: workspace + customer + issue type
        const testWorkspace = await testData.newWorkspace();
        const ctx = await testData.testAggregateContext({ testWorkspace });
        const issueType = await issueAggregate.createIssueType(ctx, {
          publicName: 'Run of the mill issues',
        });
        const customer = await customerAggregate.createCustomer(ctx, factories.newCustomer());

        // When we make GraphQL Mutation
        const res = await testWorkspace.owner.graphqlClient.request(CREATE_ISSUE_GQL_MUTATION, {
          input: { issueTypeId: issueType.id, customerId: customer.id },
        });

        // Then:
        // 1. Expect a successful response:
        expect(res).toStrictEqual({
          createIssue: {
            issue: {
              id: jestExpecters.isId('i'),
              issueType: { id: issueType.id },
              customer: { id: customer.id },
              status: IssueStatus.Open,
              issueKey: 'I-1',
            },
            error: null,
          },
        });

        // 2. Expect an event to be published:
        await testEvents.expectEvents(testWorkspace, [
          jestExpecters.standardEventStructure({
            actor: testWorkspace.owner,
            payload: {
              eventType: 'domain.issue.issue_created',
              version: 1,
              issue: res.createIssue.issue,
            },
          }),
        ]);
      });
    });
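The first step in the list above, requesting a user from a pool of authenticated users, could look roughly like the following sketch. It assumes a small, fixed set of users that were authenticated once up front and are reused across tests to stay within identity provider limits. `TestUser`, `TestUserPool`, and their methods are hypothetical names, as the article does not show the real pool.

```typescript
// A sketch of a fixed-size pool of pre-authenticated test users.
// Assumption: TestUser and TestUserPool are hypothetical; the real pool is not shown.

type TestUser = { id: string; token: string };

class TestUserPool {
  private available: TestUser[];
  private waiters: Array<(user: TestUser) => void> = [];

  constructor(users: TestUser[]) {
    this.available = [...users];
  }

  // Hand out a free user, or wait until another test releases one.
  acquire(): Promise<TestUser> {
    const user = this.available.pop();
    if (user) return Promise.resolve(user);
    return new Promise((resolve) => this.waiters.push(resolve));
  }

  // Return a user to the pool, passing it straight to the longest-waiting test if any.
  release(user: TestUser): void {
    const waiter = this.waiters.shift();
    if (waiter) waiter(user);
    else this.available.push(user);
  }

  // Convenience wrapper that always releases the user, even when the test throws.
  async withUser<T>(fn: (user: TestUser) => Promise<T>): Promise<T> {
    const user = await this.acquire();
    try {
      return await fn(user);
    } finally {
      this.release(user);
    }
  }
}

// Usage: five concurrent "tests" share two users, so at most two run at once.
async function demoPool(): Promise<{ completed: number; maxInUse: number }> {
  const pool = new TestUserPool([
    { id: 'u1', token: 't1' },
    { id: 'u2', token: 't2' },
  ]);
  let inUse = 0;
  let maxInUse = 0;
  const tasks = [1, 2, 3, 4, 5].map(() =>
    pool.withUser(async () => {
      inUse += 1;
      maxInUse = Math.max(maxInUse, inUse);
      await new Promise((resolve) => setTimeout(resolve, 5)); // simulated test body
      inUse -= 1;
    }),
  );
  await Promise.all(tasks);
  return { completed: tasks.length, maxInUse };
}
```

Creating a fresh workspace per test, as in the second step, keeps the tests isolated even though the users themselves are shared.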

A typical EventBridge event listener integration test would:

- Set up any required state (this is highly dependent on the exact Lambda)

- Publish an EventBridge event onto a bus

- Wait and expect the side effects, which could be:

  - Another EventBridge event being published

  - State in a datastore being updated (e.g. in DynamoDB, RDS, S3)

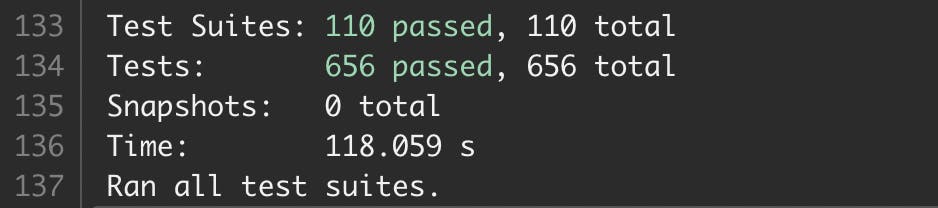
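Because an event listener's side effects appear asynchronously, the "wait and expect" step usually comes down to polling until a condition holds or a timeout expires. The helper below is a generic sketch of that idea. `waitFor` and its options are hypothetical names rather than Plain's test utilities, and an in-memory array stands in for the datastore.

```typescript
// A generic polling helper for eventually consistent side effects.
// Assumption: the name waitFor and its options are hypothetical.

async function waitFor<T>(
  check: () => Promise<T | undefined>,
  options: { timeoutMs: number; intervalMs: number },
): Promise<T> {
  const deadline = Date.now() + options.timeoutMs;
  for (;;) {
    const result = await check();
    if (result !== undefined) return result;
    if (Date.now() >= deadline) {
      throw new Error(`condition not met within ${options.timeoutMs}ms`);
    }
    // Sleep before the next attempt so the datastore is not hammered.
    await new Promise((resolve) => setTimeout(resolve, options.intervalMs));
  }
}

// Usage: an in-memory array stands in for a datastore that a listener writes to later.
async function demoWaitFor(): Promise<string> {
  const store: string[] = [];
  setTimeout(() => store.push('customer_updated'), 20); // simulated asynchronous side effect
  return waitFor(async () => store.find((item) => item === 'customer_updated'), {
    timeoutMs: 1000,
    intervalMs: 5,
  });
}
```

A bounded timeout matters here: a side effect that never happens should fail the test with a clear message instead of hanging the suite.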

If you’ve ever written any integration tests you must be screaming in your head: running all this must be so slow! They are definitely slower than unit tests, but not unbearably slow. Since all the services we use are serverless, and we make sure our integration tests have zero shared state, we’re capable of running all of our tests in parallel. We haven’t gone to that degree of optimisation yet, but as an example, our CI with a parallelism of 40 runs 656 test cases across 110 test suites in a bit under 2 minutes, exhaustively integration testing every corner of our application.
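To make the effect of a fixed parallelism concrete, here is a small sketch of running independent suites with a bounded number of workers. `runWithConcurrency` is a hypothetical helper written for this example. Real test runners and CI systems provide their own worker and sharding options, and the article does not say which mechanism Plain uses.

```typescript
// A sketch of running independent test suites with a fixed level of parallelism.
// Assumption: runWithConcurrency is a hypothetical helper, not part of any test runner.

async function runWithConcurrency<T>(
  tasks: Array<() => Promise<T>>,
  concurrency: number,
): Promise<T[]> {
  const results: T[] = new Array(tasks.length);
  let nextIndex = 0;

  // Each worker keeps pulling the next unclaimed task until none are left.
  async function worker(): Promise<void> {
    while (nextIndex < tasks.length) {
      const index = nextIndex++;
      results[index] = await tasks[index]();
    }
  }

  const workerCount = Math.min(concurrency, tasks.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Usage: 110 fake suites with a parallelism of 40, mirroring the numbers above.
async function demoParallelRun(): Promise<{ suites: number; maxRunning: number }> {
  let running = 0;
  let maxRunning = 0;
  const suites = Array.from({ length: 110 }, (_, i) => async () => {
    running += 1;
    maxRunning = Math.max(maxRunning, running);
    await new Promise((resolve) => setTimeout(resolve, 1)); // simulated suite duration
    running -= 1;
    return i;
  });
  const results = await runWithConcurrency(suites, 40);
  return { suites: results.length, maxRunning };
}
```

This only works safely because the suites share no state: any suite can run next to any other, in any order.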

Integration test flakiness is another thing we actively tackle by running our tests on a schedule during the work week. If we ever get a test failure, we jump on the issue and track down the root cause of it. This also involved rethinking and restructuring how we test certain things, like GraphQL subscriptions, in a way which is robust and reliable.

We’ve only just dipped our toes into our integration testing setup here; this topic is definitely worthy of its own post. That said, given that our API is a key part of our product, having every GraphQL query and mutation integration tested is crucial. We think the trade-off of having a slightly slower test suite and a much higher confidence that the feature or change will work correctly is worth it.

Full-stack TypeScript

While using full-stack TypeScript isn’t strictly necessary to have a great developer experience on AWS, it really makes our team much more effective. The ability to move between the frontend, backend, and infrastructure code without having to learn a different language is invaluable to every member of the team.

You still need to learn the AWS services when developing backend code, but this is natural when working with anything. You likewise need to understand CSS / HTML to develop frontend web applications. With SST and CDK in TypeScript, once you’ve figured out which AWS services you’d like to use, the TypeScript types and the editor’s autocomplete guide you in defining the correct infrastructure.

We have most of our backend codebase in a single monorepo and use a handful of tools and libraries such as pnpm, zod, true-myth, and swc to make our code even better to work with, but more on that in a future post!

Putting it all together

So what does this look like in practice? Let’s take a look at what making a change looks like:

In this example we created a workspace in Plain via our core GraphQL API. This verified that the API call works end to end:

- User fetched a valid JWT from our identity provider

- AWS API Gateway handled the GraphQL request and verified the validity of the JWT

- The GraphQL Lambda created a new workspace in our Aurora Serverless PostgreSQL database and published an event to EventBridge

  - This verified that the Lambda has the correct IAM permissions to read from and write to PostgreSQL and publish to EventBridge

- A successful response was returned to the client

Conclusion

Combining these technologies and practices means we can focus on shipping features:

- In isolation, without impacting other engineers, thanks to everyone having their own AWS account

- With a quick feedback loop using live AWS services, knowing that it will work when deployed, thanks to SST and Live Lambda Development

- With serverless infrastructure that is easy to develop, thanks to CDK

- With high confidence in correctness, thanks to our integration testing

- Without having to learn a different programming or templating language when switching between frontend, backend, and infrastructure

Could it be better? There’s definitely room for improvement, but I think this is already quite 🌈 magical 🌈! If you have any questions or know of ways we can make our stack even better, get in touch with us on Twitter at @builtwithplain or me at @akoskrivachy.

If you’re interested in working with our 🌈 magical 🌈 tech stack check out our current opening on Plain’s Jobs page.